Abstract

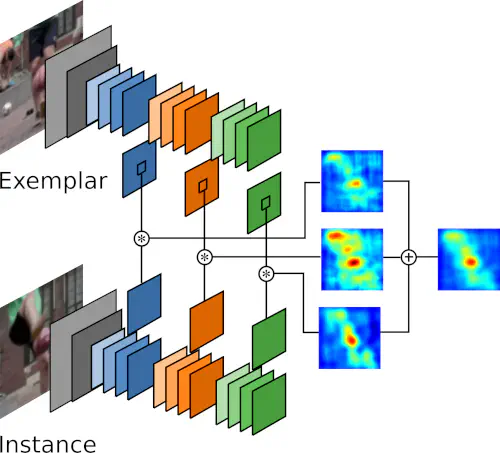

Siamese networks have been successfully used to learn a robust matching function between pairs of images. Visual object tracking methods based on Siamese networks have recently gained popularity due to their robustness and speed. However, existing Siamese approaches still do not perform on par with the most accurate trackers. In this paper, we extend the SiamFC tracker to extract features at multiple context and semantic levels from very deep networks. We show that our approach effectively extracts complementary features for Siamese matching from different layers, and that fusing these features provides a significant performance boost. Experimental results on the VOT and OTB datasets show that our multi-context tracker is comparable to the most accurate methods while still being faster than most of them. In particular, we outperform several other state-of-the-art Siamese methods.