Abstract

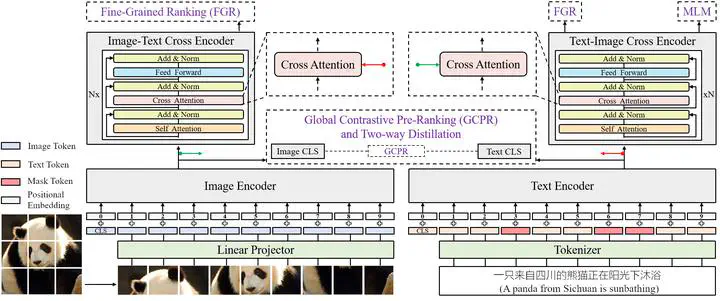

Vision-language pre-training (VLP) on large-scale datasets has shown strong performance on various downstream tasks. In contrast to the many benchmarks available for English corpora, large-scale pre-training datasets and downstream datasets for Chinese corpora remain largely unexplored. In this work, we build a large-scale, high-quality Chinese Cross-Modal Benchmark named CCMB for the research community, which contains CCMB-Corpus, currently the largest public pre-training dataset, and five human-annotated fine-tuning datasets for downstream tasks. CCMB-Corpus contains 250 million images paired with 750 million text descriptions, and two of the five fine-tuning datasets are also currently the largest ones for Chinese cross-modal downstream tasks. Along with CCMB, we also develop a VLP framework named PANDA, providing a strong baseline for Chinese cross-modal learning. With CCMB-Corpus and the PANDA VLP framework, we achieve state-of-the-art performance on twelve downstream datasets from five broad categories of tasks: image-text retrieval, image-text matching, image captioning, text-to-image generation, and zero-shot image classification.