RAPIDFlow: Recurrent Adaptable Pyramids with Iterative Decoding for Efficient Optical Flow Estimation

Abstract

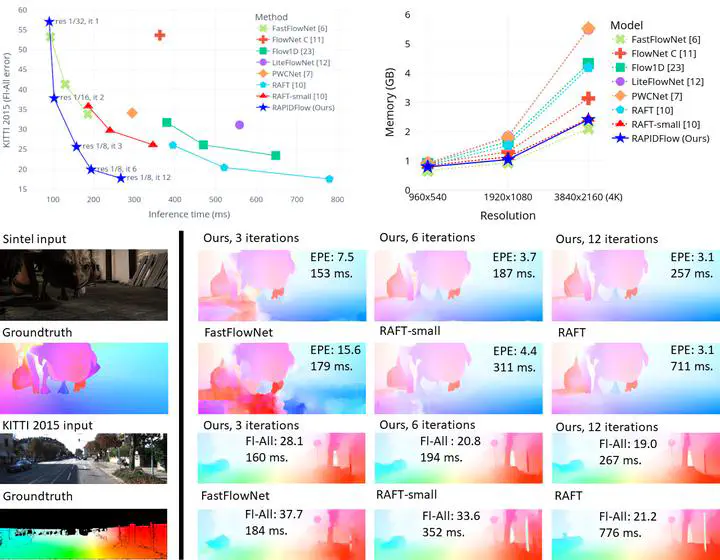

Extracting motion information from videos with optical flow estimation is vital in many practical robotics applications. Current optical flow approaches show remarkable accuracy, but top-performing methods have high computational costs and are unsuitable for embedded devices. Although some previous works have focused on developing low-cost optical flow strategies, their estimation quality lags noticeably behind that of more robust methods. In this paper, we develop a novel method to efficiently estimate high-quality optical flow on embedded devices. Our proposed RAPIDFlow model combines efficient NeXt1D convolution blocks with a fully recurrent structure based on feature pyramids to decrease computational costs without significantly impacting estimation accuracy. The adaptable recurrent encoder produces multi-scale features with a single shared block, which allows us to adjust the pyramid length at inference time and make the model more robust to changes in input size. It also enables our model to offer multiple trade-offs between accuracy and speed to suit different applications. Experiments using a Jetson Orin NX embedded system on the MPI-Sintel and KITTI public benchmarks show that RAPIDFlow outperforms previous approaches by significant margins while running at faster speeds.
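As a rough illustration of how a single shared block can yield a pyramid whose length is chosen at inference time, consider the minimal sketch below. It is not the RAPIDFlow encoder: `shared_block` is a hypothetical stand-in that uses 2x2 average pooling with one shared scalar weight in place of the learned NeXt1D convolutions, and the names `recurrent_pyramid` and `num_levels` are assumptions made for this example only.

```python
from typing import Callable, List

Grid = List[List[float]]  # a single-channel feature map as nested lists


def shared_block(feat: Grid, weight: float = 1.0) -> Grid:
    """Hypothetical stand-in for the shared encoder block.

    Halves the resolution with 2x2 average pooling and scales the result
    by one shared weight. A real block would use learned convolutions.
    """
    h, w = len(feat) // 2, len(feat[0]) // 2
    return [
        [
            weight * (feat[2 * i][2 * j] + feat[2 * i][2 * j + 1]
                      + feat[2 * i + 1][2 * j] + feat[2 * i + 1][2 * j + 1]) / 4.0
            for j in range(w)
        ]
        for i in range(h)
    ]


def recurrent_pyramid(image: Grid, num_levels: int,
                      block: Callable[[Grid], Grid] = shared_block) -> List[Grid]:
    """Apply the same block num_levels times and keep every output."""
    pyramid = []
    feat = image
    for _ in range(num_levels):
        feat = block(feat)  # the same parameters are reused at every scale
        pyramid.append(feat)
    return pyramid


# A 16x16 toy input; the pyramid length is picked per call, not per model.
image = [[float(r * 16 + c) for c in range(16)] for r in range(16)]
short = recurrent_pyramid(image, num_levels=2)
long = recurrent_pyramid(image, num_levels=4)
print([(len(f), len(f[0])) for f in short])  # [(8, 8), (4, 4)]
print([(len(f), len(f[0])) for f in long])   # [(8, 8), (4, 4), (2, 2), (1, 1)]
```

Because every level reuses the same block, changing `num_levels` adds or removes scales without adding parameters, which is the property that would let one trained model trade accuracy for speed or adapt to a different input size.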